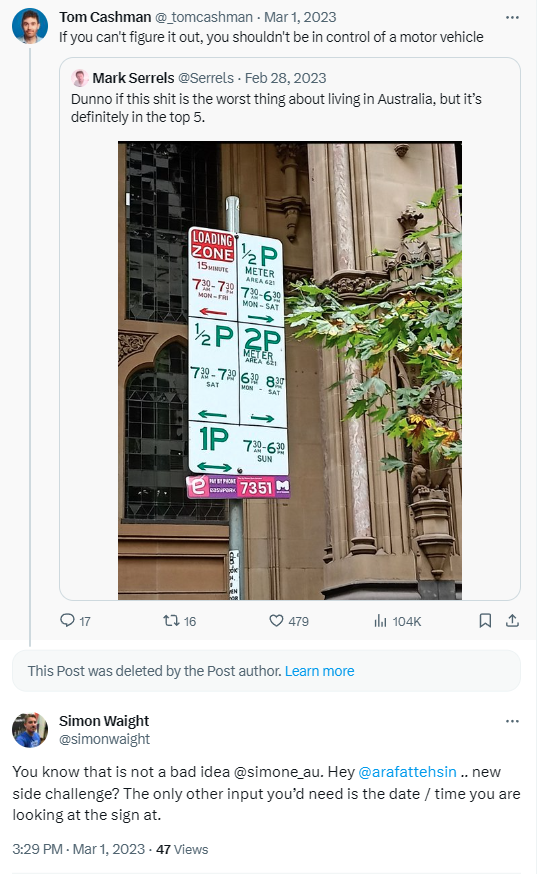

About 10 months ago (March 2023), one of my good friends and our local community leader, Simon Waight, mentioned me in a tweet. It was about solving a complex parking problem with Computer Vision. I bookmarked this interesting challenge and added it to my want-to-do list. A month later, I picked it up and started thinking about how I would solve it. I ran into several problems, which I describe later in this blog post, but I finally managed to create this small yet useful cross-platform app (or copilot, or GPT, or whatever you want to call it) for everyone living in Australia. It still needs plenty of improvements and additional features, but it is live and open source. Consider it a New Year gift from me.

Learning from my failures

As I mentioned earlier, it was just a tweet that triggered this idea. However, there were many hiccups during development. Someone rightly said that success never comes easy. As the world goes through a rapid change in how we develop, use and interact with technology, taking on these challenges can be daunting, time-consuming and hard. As soon as I picked this up, I opted for the latest and finest Computer Vision technology of the time: Project Florence by Microsoft, a large foundation model for vision. I chose it because I wanted to use the model's dense captions capability.

However, after trying out different techniques, I failed because it couldn't reliably OCR the parking signs. Later, one of the product team members suggested I use Form Recognizer (now called Azure AI Document Intelligence). I tried that too, without success. The problem with Form Recognizer was that the extracted text was not always correct; sometimes it was jumbled. To work around this, I tried a combined approach: I used Form Recognizer for OCR (which produced somewhat gibberish results) and then corrected its output with GPT-3. This worked for a few signs but failed miserably for others; overall, the success rate was 50%. I wanted something robust that would solve this problem without training on a custom dataset.

Finally, I witnessed the launch of OpenAI's GPT-4 with Vision, followed by support for it in Azure OpenAI Service. It felt like my wish had come true, but I had to wait because I had so many other things planned, especially year-end tasks and family holidays. 🎈

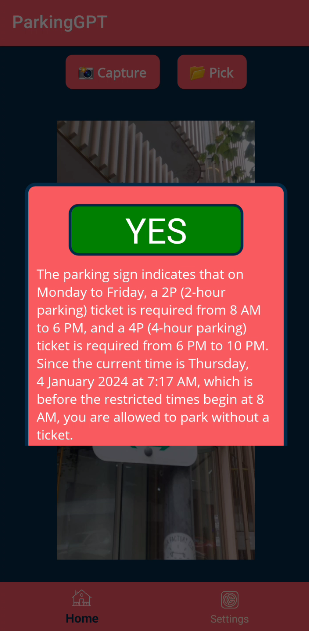

Considering all of this, I set aside dedicated time in my personal schedule to work on it, as I really wanted to finish it before work resumed in January 2024. So here I am, and I think I achieved what I planned. I created ParkingGPT to help my fellow Aussies decide whether they can park. It is at a very early stage, and so far I have only tested it with parking signs in New South Wales. If you are based in another state, please feel free to give it a shot and let me know your feedback. 🙏

ParkingGPT

ParkingGPT helps you decide whether to park, using the power of vision AI and LLMs. It uses the newly released GPT-4 Turbo with Vision. With ParkingGPT, you can easily identify parking signs and make informed decisions about where to park. Whether you're a driver looking for a convenient parking spot or a parking enforcement officer enforcing parking regulations, ParkingGPT is your AI parking copilot.

The app has just two main features: it lets you either capture a photo of a parking sign with your camera or pick one from your photo gallery.

Technical Implementation

The implementation is quite straightforward, as you can see from the repo. I have tried my best to follow .NET MAUI best practices and the usual conventions of app development. There may be some negative cases I have missed, such as writing an effective prompt that ignores images that aren't parking signs, but overall it works well.

The app takes an image (either from the camera or from the gallery) and converts it into a base64-encoded string. This string is sent to the OpenAI API for processing, and the model's response is returned to the app. The app works with both Azure OpenAI and OpenAI; all you need is the API endpoint and key. These are kept in the app's own storage, so you do not have to enter them again and again.

Some FAQs

Is it published on the Google Play Store?

No, but you can request the .apk package by commenting on this post and sideload it onto your Android device for now. If there's enough interest, I may consider publishing it to the store.

Is it published on the Apple App Store?

No. I do not own a MacBook, which prevents me from creating a package (.ipa) for this app. The developer program subscription is also too expensive for a hobbyist, but I will see.

Does it work seamlessly with multiple parking signs?

I have tried images with two or three signs and it works fine. However, I am not sure how it handles more than that; I am still testing it.

Is it smart enough to make a decision based on both no-parking signs and parking signs?

Not yet. I will look into this in the future.

If you are a developer, the code is open source, so you can see how I built it. As part of my mission to empower every developer with the necessary applied AI skills, I built this in my personal time. Please do not forget to star ⭐ the repo if you like it, and share it with your friends so others can get an idea of how to create small but useful utilities like ParkingGPT.

Until next time.