The world is changing rapidly, and so are the applications we use every day at the workplace, at home, at the gym, on the playground and the like. Gone are the days when applications were just software that ran on your workstation or mobile phone with limited functionality and interaction. Today, we have applications that can talk to us, understand us and help us in various ways. These are the AI First Apps: applications that use artificial intelligence (AI) as their core technology and functionality, rather than as an add-on or a feature.

AI First Apps are not new, but the advent of ChatGPT has revolutionised the way they are built and used. The accessibility of large language models (LLMs) has taken the internet by storm. As someone who has been building chatbots for more than 5 years, I can safely say that conversational apps that used to take months now take just a few days to deliver. And it is not just chatbots: individuals, startups and even large enterprises are infusing LLM capabilities into various types of apps such as grocery shopping, services, streaming, ride sharing and so on.

Now you may ask: how do I use AI as the core of my app? There can be multiple answers to this question, but let’s focus on two of them.

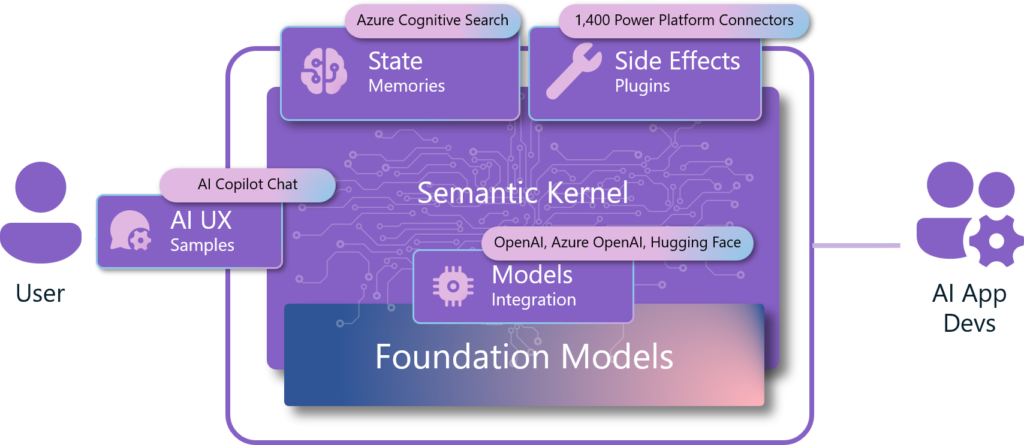

Broadly, there are two ways to use AI as the core of your app. One way is to create your own wrappers for the exposed LLM APIs (such as OpenAI, Azure OpenAI, Hugging Face etc.) and then consume them in your applications. The other way is to use an SDK to do the heavy lifting for you.

With the first way, reliability and scale depend on the scope of your application. If it is a simple app that takes an input, processes it and returns an output, then this approach may suit your needs. With the second approach, you do not have to take care of the underlying architecture: how the information is processed, the target schema and much more. All you have to focus on is your own business logic, so you reach your goal faster. One of the tools for this is Semantic Kernel.

Semantic Kernel

Microsoft’s Semantic Kernel can be considered as a big box of Lego blocks. Each block represents a different AI service, like understanding language, answering questions or even creating art. As a developer, you can pick and choose which blocks you want to use to build your application, just like you would build a Lego model. You can combine these blocks in any way you want to create something unique. The best part is that Semantic Kernel is open-source. This means that not only can you use all these blocks for free, but you can also create your own blocks and share them with others.

As a lot of folks have already spoken and written quite well about it, I won’t go into the same kind of detail. Rather, I’ll give you a different perspective: the one for which this whole package came into being. In this post, I will be talking about a cross-platform app called Guess What?, which I built using .NET MAUI 8 and Semantic Kernel. It should give you a perspective on building your own apps with AI at the core of their functionality.

If you are getting started with the Semantic Kernel then head over to this notebook as it will guide you from the start to something meaningful.

Now, let’s talk about the high-level architecture of the app. We’ll start with .NET MAUI first as that’s the front-end and then we’ll deep dive into some of the concepts as well as the specifics of Semantic Kernel.

Guess What? A .NET MAUI 8 App

Whenever a new technology comes in, some great minds come together and start sharing their insights, learning experiences and gotchas around it. However, most of the time, it starts with a Hello World kind of example and ends there. I thought that rather than taking just the notebook route, I should build a complete solution. This way, developers will see the end-to-end experience of an app.

That is not to ignore the importance of notebooks: they help us learn these technical concepts faster within a single window, hence I created one too.

Note: I had to polish my XAML skills, as it’s been a few years since I last created something using similar technologies such as Silverlight, WPF, UWP etc. For that, I really want to call out James Montemagno for his excellent tutorials. If you’re keen on learning .NET MAUI along with many other useful things around .NET, then I’d highly recommend that you at least subscribe to his channel. I can assure you it takes a lot of time to produce quality content.

I decided to build the app with .NET MAUI because I wanted to keep it cross-platform, and my focus was on mobile so that I can extend it later with other capabilities.

But… what does this app do?

Guess What? is a cross-platform app that lets you answer riddles and guess landmarks. The riddles and landmarks are generated by AI, all powered by Semantic Kernel and Azure OpenAI / OpenAI models such as GPT-4, DALL-E 2 and ada.

Before I release it to the public, I am working on its documentation so it is easier to navigate. If you’re keen on getting early access, please reach out and let me know how you can help, and I will add you to the private repo.

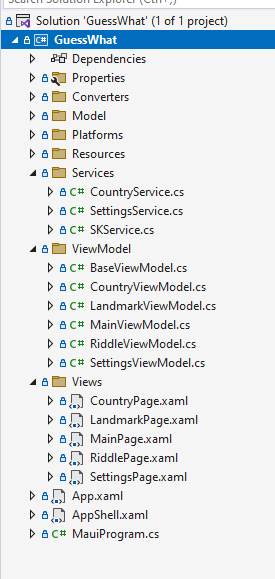

The app does not have a complex structure; it follows the usual .NET best practices with the MVVM pattern. Dependency injection made this a lot easier, so it was definitely a plus for me. The screenshot below will give you a clearer picture of what I have done so far:

Services

In addition to the usual views and view models, there are 3 types of services. CountryService is the service that loads the 100 most populous countries.

SettingsService, on the other hand, just writes settings such as your Azure OpenAI API key, endpoint, deployment name etc. to the application’s persistent storage.

Back to Semantic Kernel for Riddles and Landmarks

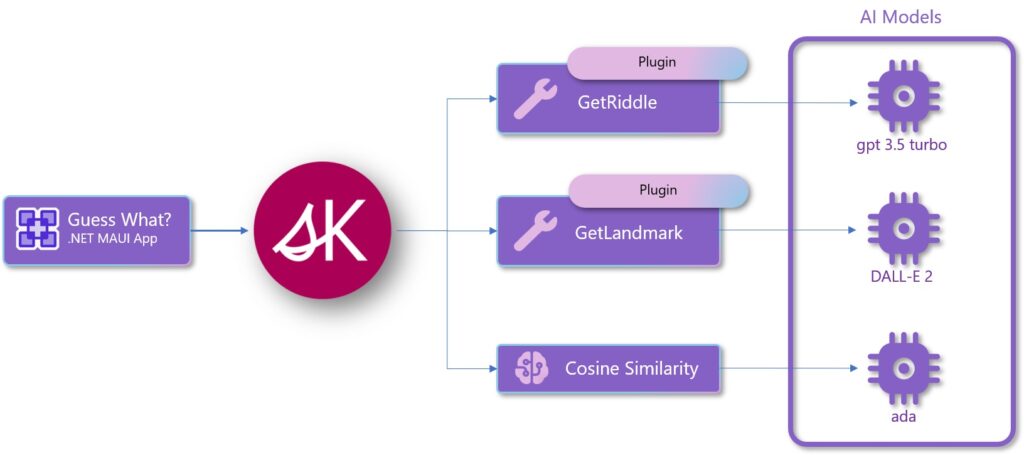

So, after discussing all the other details, let’s get back to the main topic of this post: how I used Semantic Kernel with a .NET MAUI app, what worked and what did not. As I wrote earlier, I won’t go into the details of each and every component of this SDK; rather, I will cover what I have used in this app. Here’s the high-level architecture of the app:

Semantic Kernel has a lot of components, or building blocks if you like. AI Plugins are one of the essential ones.

I’ve tried my best to keep the descriptions of these new concepts easy enough that any junior developer can understand them.

AI Plugins

AI plugins are like a set of instructions or tasks that your application can perform. You can think of them as a recipe book for your application. Each recipe (or plugin) contains a list of ingredients (or functions) and steps (or triggers and actions) that your application can use to accomplish a specific task. For example, let’s say you’re building a chatbot and you want it to be able to answer questions about the weather. You could create a plugin that contains a function for fetching the current weather data from a weather API and another function for formatting that data into a user-friendly message.
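To make the recipe-book idea concrete, here is a minimal sketch in plain Python of the weather plugin described above. It does not use the Semantic Kernel API; `WeatherPlugin`, `WeatherReport` and both method names are hypothetical, and the weather data is a hard-coded stand-in for a real API call.

```python
from dataclasses import dataclass


@dataclass
class WeatherReport:
    city: str
    temperature_c: float
    condition: str


class WeatherPlugin:
    """Hypothetical plugin: groups two related functions under one name."""

    def fetch_current_weather(self, city: str) -> WeatherReport:
        # A real plugin would call a weather API here. This stub returns
        # fixed, made-up data so the sketch runs offline.
        return WeatherReport(city=city, temperature_c=21.0, condition="sunny")

    def format_message(self, report: WeatherReport) -> str:
        # Turn the raw data into a user-friendly sentence.
        return (
            f"It is currently {report.condition} in {report.city} "
            f"at {report.temperature_c:.0f}°C."
        )


plugin = WeatherPlugin()
message = plugin.format_message(plugin.fetch_current_weather("Sydney"))
print(message)  # It is currently sunny in Sydney at 21°C.
```

The point of the grouping is that the application only needs to know the plugin and its function names, not how each function does its work.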

Plugins are the fundamental building blocks of Semantic Kernel and can interoperate with plugins in ChatGPT, Bing, and Microsoft 365. With plugins, you can encapsulate capabilities into a single unit of functionality that can then be run by the kernel. Plugins can consist of both native code and requests to AI services via prompts.

This means any plugins you build can be exported so they are usable in ChatGPT, Bing, and Microsoft 365. This allows you to increase the reach of your AI capabilities without rewriting code. It also means that plugins built for ChatGPT, Bing, and Microsoft 365 can be imported into Semantic Kernel seamlessly.

Prompts

Think of Prompts as the individual steps in a recipe. Each step (or prompt) tells your application what to do and how to do it. For example, let’s say you’re building a chatbot and you want it to be able to answer questions about the weather. One of your Prompts might be a step that fetches the current weather data from a weather API. Another Prompt might be a step that takes that weather data and formats it into a user-friendly message.

Prompts are used in plugins, which are like the full recipes in your recipe book. Each plugin can contain multiple Prompts and each prompt can be triggered by certain events or conditions.
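As a rough illustration, a Prompt is a text template with placeholders that get filled in before the text is sent to the model. The sketch below uses the `{{$name}}` variable syntax of Semantic Kernel prompt templates, but the template text, the variable names and the tiny `render_prompt` helper are all assumptions made for this example; the helper is a simplified stand-in, not the SDK's own renderer.

```python
import re

# Hypothetical prompt template for the weather chatbot example.
WEATHER_PROMPT = (
    "Write one friendly sentence telling the user about the weather.\n"
    "City: {{$city}}\n"
    "Conditions: {{$conditions}}\n"
)


def render_prompt(template: str, variables: dict[str, str]) -> str:
    """Replace each {{$name}} placeholder with its value from `variables`."""

    def substitute(match: re.Match) -> str:
        # Raises KeyError if the template uses a variable that was not given.
        return variables[match.group(1)]

    return re.sub(r"\{\{\$(\w+)\}\}", substitute, template)


prompt = render_prompt(
    WEATHER_PROMPT,
    {"city": "Lahore", "conditions": "31°C, clear sky"},
)
print(prompt)
```

The rendered text is what would be sent to the LLM; the model's reply is the output of the Prompt.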

So, if you’re building an application and you want it to have some cool AI features, Prompts give you the tools to do that. They’re like the individual steps that make up your app’s recipe for success.

This app has two essential features: Riddles and Landmarks. Both use LLM capabilities to generate the riddles and landmark images.

As demonstrated in the video below, in the Riddles section you just have to answer the riddle.

In the Landmarks section, however, you choose the country first and then you have to guess the landmark.

Both of the above are generated by AI using Prompts. Here’s an example of the Prompt for Riddles.

Although the app’s functionality is based around Prompts, there’s one more type of function: the Native Function. We will look at Native Functions in detail next.

Native Function

Think of Native Functions in Semantic Kernel as the kitchen tools you use when following a recipe. These could be things like a blender, an oven or a knife. They’re the tools that allow you to interact with your ingredients (data) and transform them in different ways.

For example, let’s say you’re building a chatbot and one of your recipes (plugins) is for baking a cake. One of your steps (Prompts) might be to mix the ingredients together (combine data), but you can’t do that with your hands. You need a tool (Native Function) like a blender.

So, you could create a Native Function that takes your ingredients (data), uses the blender (performs an operation on the data) and returns a cake batter (the result of the operation).

Just like in cooking, having the right tools can make all the difference. That’s why Semantic Kernel allows you to create your own Native Functions. So, whether you’re baking a cake or building an application, you’ll always have the tools you need to get the job done.
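In code terms, a Native Function is ordinary code rather than a prompt, which makes it a good fit for work that should be exact and repeatable. Here is a minimal sketch in plain Python, staying with the weather chatbot example; the function name is hypothetical and nothing here uses the Semantic Kernel API.

```python
def celsius_to_fahrenheit(celsius: float) -> float:
    """Deterministic conversion: same input, same output, no model call."""
    return celsius * 9 / 5 + 32


print(celsius_to_fahrenheit(25.0))  # 77.0
```

A Prompt could then take this exact number and ask the model to phrase it nicely, so each kind of function does what it is best at.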

Alright... but how does it match?

You must be wondering how the matching of an answer works. Isn’t it just a string comparison? The short answer is no, because a single answer can have multiple variations. For example, take the riddle “I can be sweet or sour, I wear a natural crown, and I’m often seen in pies. What am I?” Its obvious answer is Apple. However, there’s a chance that a person may write The Apple or An Apple, which is also correct, isn’t it? So how does it work? I know some would say there are better ways to match it, but that is not the intent of this post, right? The intent is in the title of this post, and the answer is in the animation below. This is where the concepts of Embeddings and Cosine Similarity come into play. Do not get overwhelmed by these terms; I have tried to explain them in a simple way.

Embeddings

Computers don’t understand words and sentences the way humans do. So, we need a way to convert our text data into a numerical format that a computer can work with. That’s where embeddings come in.

An embedding takes a word or a piece of text and maps it to a high-dimensional vector of real numbers. The interesting part is that these vectors are created in such a way that words with similar meanings are placed close together in this high-dimensional space. This allows the machine learning model to understand the semantic similarity between different words or pieces of text.

For example, the words “cat” and “kitten” would be mapped to vectors that are close together in the embedding space, because they have similar meanings. On the other hand, the words “cat” and “car” would be mapped to vectors that are further apart, because they are semantically different.
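The idea of "close together" can be sketched with toy numbers. The three vectors below are made up by hand purely for illustration; real embeddings come from a model and usually have hundreds or thousands of dimensions.

```python
import math

# Made-up 3-dimensional vectors, NOT real model output.
toy_embeddings = {
    "cat":    [0.90, 0.80, 0.10],
    "kitten": [0.85, 0.90, 0.15],
    "car":    [0.10, 0.20, 0.95],
}


def distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


cat_kitten = distance(toy_embeddings["cat"], toy_embeddings["kitten"])
cat_car = distance(toy_embeddings["cat"], toy_embeddings["car"])

# "cat" sits much closer to "kitten" than to "car" in this toy space.
print(f"cat-kitten: {cat_kitten:.2f}, cat-car: {cat_car:.2f}")
```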

Cosine Similarity

Cosine similarity is a way of comparing two things, but instead of comparing them directly, we compare the direction they’re heading. Imagine you and your friend are going on a trip. You’re starting from the same place but you might not be going to the same destination. The cosine similarity is a measure of how similar your destinations are based on the direction you’re heading.

In terms of vectors (which you can think of as arrows pointing from one point to another), the cosine similarity is calculated by taking the cosine of the angle between the two vectors. If the vectors are pointing in exactly the same direction, the angle between them is 0 degrees and their cosine similarity is 1. If they’re pointing in completely opposite directions, the angle is 180 degrees and their cosine similarity is -1. If they’re at right angles to each other (90 degrees), their cosine similarity is 0.
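The three cases above can be checked with a few lines of Python. This is a minimal sketch using only the standard library; the example vectors are chosen so the arithmetic comes out exact.

```python
import math


def cosine_similarity(a, b):
    """cos(theta) = (a . b) / (|a| * |b|) for two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


same = cosine_similarity([3, 4], [6, 8])            # same direction
opposite = cosine_similarity([3, 4], [-3, -4])      # opposite direction
perpendicular = cosine_similarity([3, 4], [-4, 3])  # right angle

print(same, opposite, perpendicular)  # 1.0 -1.0 0.0
```

Note that `[3, 4]` and `[6, 8]` have different lengths but score 1, because cosine similarity only looks at direction, not magnitude.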

In programming or data science, we often use cosine similarity to compare different pieces of data. For example, if we have two documents and we want to see how similar they are, we can represent each document as a vector (where each dimension corresponds to a word, and the value in that dimension corresponds to how often that word appears in the document), and then calculate the cosine similarity between these two vectors.
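Here is a minimal sketch of that word-count approach, applied to the riddle answers from earlier. Keep in mind this is the simple word-frequency representation described in the paragraph above, not the model-generated embeddings the app relies on, and the helper names are my own.

```python
import math
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Count how often each lowercase word appears in the text."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    # Only words present in both texts contribute to the dot product.
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text is treated as similar to nothing
    return dot / (norm_a * norm_b)


answer = bag_of_words("apple")
guess = bag_of_words("the apple")
unrelated = bag_of_words("a banana")

print(round(cosine_similarity(answer, guess), 3))  # 0.707
print(cosine_similarity(answer, unrelated))        # 0.0
```

With plain word counts, the extra word "the" drags the score for "the apple" down to about 0.707, even though the answer is right. Embeddings capture meaning rather than exact words, which is why they are the better fit for judging answers.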

As we have seen, the rapid evolution of technology has significantly impacted the applications we use daily, paving the way for AI First Apps. Developers can use Semantic Kernel to integrate AI into their own solutions without having to worry about the underlying architecture. This is just a basic example, but it is a step forward towards tackling the next level of problems. I will try my best to cover the next iteration soon, with concepts like Planners, Semantic Memory and so on.

Until next time.

Updated on 8th January, 2024: Semantic Functions have been renamed to Prompts.