When you start building AI agents, it doesn’t take long before you hit a wall and ask yourself, “How do I get my agents to talk to each other without reinventing the wheel every time?” Sure, you can handcraft APIs, define your own payload formats and pray the next developer follows your conventions. However, that’s a quick road to a spaghetti mess of integrations. That's why I'd like to introduce you to the A2A (Agent-to-Agent) protocol, if you haven't heard of it yet. A2A is an open standard that gives AI agents a common language to communicate, collaborate and orchestrate tasks… no matter who built them or what tech stack they use.

Think of it as Lego bricks for AI agents: different shapes, colors, and purposes, but all snapping together effortlessly.

Why do we need this?

AI agents are everywhere now (I know there are bad ones too): customer service agents, data processors, travel planners and so on. They all work great (if designed well), but in silos. Now imagine planning a holiday and using different tools to finalise your plan. This is where you'll feel the need for agents that can work together:

- A flight booking agent finds your tickets

- A hotel booking agent sorts your stay

- An activities agent books your tours

Each of these works well on its own, and you would never want to delegate your tours to the hotel or flight agent, or vice versa. But if they don't share a protocol (the Lego bricks), you would either have to:

- Build the whole orchestration layer yourself (which doesn't scale)

- Spend days reverse-engineering how someone else's agent works (not fun at all)

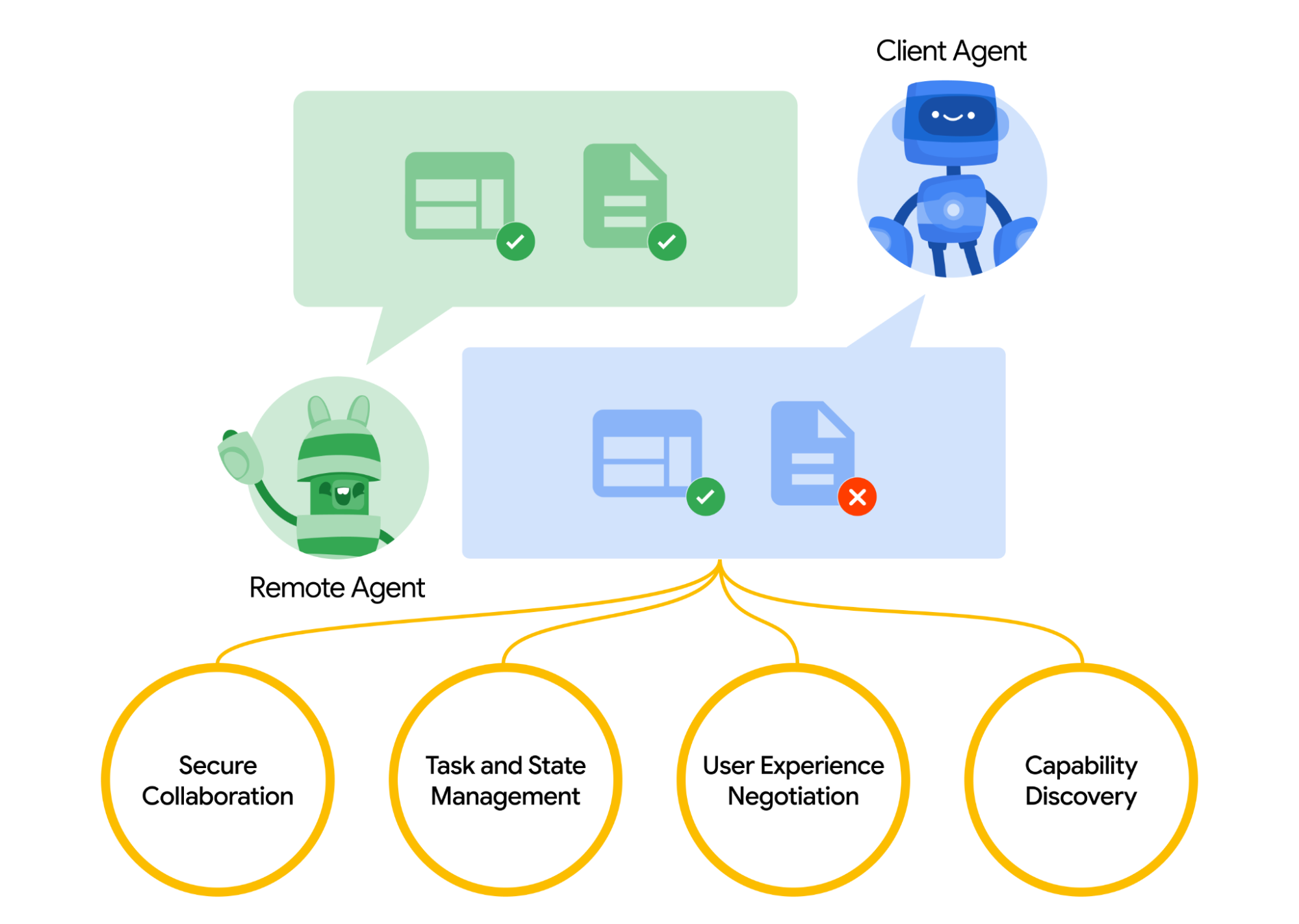

A2A solves this by letting agents share a standard "business card" about what they do and how to talk to them, so any agent can call another and get work done. This means your billing agent can collaborate with someone else's ticketing agent without either of you caring about the other's backend.
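To make the "business card" idea concrete, here is a minimal TypeScript sketch of an Agent Card and of a client picking an agent by skill tag. It assumes a trimmed subset of the card's fields (name, description, url, version, and skills with id, name, description and tags); the real specification defines more, such as capabilities and security schemes. The agents, URLs and the findAgentByTag helper are hypothetical.

```typescript
// A trimmed, hypothetical subset of an A2A Agent Card; the spec defines more fields.
interface AgentSkill {
  id: string;
  name: string;
  description: string;
  tags: string[];
}

interface AgentCard {
  name: string;
  description: string;
  url: string; // endpoint where the agent accepts JSON-RPC requests
  version: string;
  skills: AgentSkill[];
}

// Example cards for two hypothetical travel agents.
const cards: AgentCard[] = [
  {
    name: "Flight Agent",
    description: "Finds and books flights",
    url: "https://flights.example.com/a2a",
    version: "1.0.0",
    skills: [{ id: "book-flight", name: "Book flight", description: "Search and book flights", tags: ["travel", "flights"] }],
  },
  {
    name: "Hotel Agent",
    description: "Finds and books hotels",
    url: "https://hotels.example.com/a2a",
    version: "1.0.0",
    skills: [{ id: "book-hotel", name: "Book hotel", description: "Search and book rooms", tags: ["travel", "hotels"] }],
  },
];

// Return the first agent advertising a skill with the given tag, or undefined.
function findAgentByTag(all: AgentCard[], tag: string): AgentCard | undefined {
  return all.find((card) => card.skills.some((skill) => skill.tags.includes(tag)));
}

const hotelAgent = findAgentByTag(cards, "hotels");
console.log(hotelAgent?.url); // https://hotels.example.com/a2a
```

The caller never needs to know how the hotel agent is built; the card alone tells it what the agent can do and where to send requests.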

Core Concepts

At its heart, A2A is about:

- Discoverability - Agents publish an Agent Card (JSON file) with their name, skills, endpoint and authentication details.

- Interoperability - Communication is via JSON-RPC 2.0 over HTTPS. The structure is predictable, no surprises.

- Task Management - Agents can handle quick responses or long-running jobs via Task objects that move through statuses such as working, completed and failed.

- Streaming Updates - Using Server-Sent Events (SSE), agents can push live progress or chunked results (great for large responses).

- Opaque Implementations - You never expose your internal code, only your public interface.
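Here is a sketch of what travels over the wire: a JSON-RPC 2.0 request that sends a text message to an agent, plus a check for whether a task has reached a final state. It assumes the message/send method and the kind-tagged text parts used in recent versions of the A2A spec (earlier drafts used different names, such as tasks/send), and it models only a subset of the task states. buildSendRequest and isTerminal are hypothetical helpers, not SDK functions.

```typescript
// Hypothetical, trimmed types modelled on A2A's JSON-RPC shapes; see the spec for full definitions.
type TextPart = { kind: "text"; text: string };

interface A2AMessage {
  role: "user" | "agent";
  parts: TextPart[];
  messageId: string;
}

interface JsonRpcRequest<P> {
  jsonrpc: "2.0";
  id: number | string;
  method: string;
  params: P;
}

// A subset of A2A task states.
type TaskState = "submitted" | "working" | "input-required" | "completed" | "canceled" | "failed";

// Build a JSON-RPC 2.0 envelope that sends a single text message to an agent.
function buildSendRequest(id: number, messageId: string, text: string): JsonRpcRequest<{ message: A2AMessage }> {
  return {
    jsonrpc: "2.0",
    id,
    method: "message/send",
    params: { message: { role: "user", parts: [{ kind: "text", text }], messageId } },
  };
}

// Terminal states will not change again, so a client can stop polling.
function isTerminal(state: TaskState): boolean {
  return state === "completed" || state === "canceled" || state === "failed";
}

const request = buildSendRequest(1, "msg-001", "I was charged twice this month");
console.log(JSON.stringify(request));
console.log(isTerminal("working")); // false
```

Because every agent accepts the same envelope, the only thing that changes between agents is the URL you post it to.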

As this post is mainly about how I built a demo and the workflow behind it, I'll skip the 101 material, because the awesome team at Google and Microsoft already covers it in the A2A .NET SDK repo.

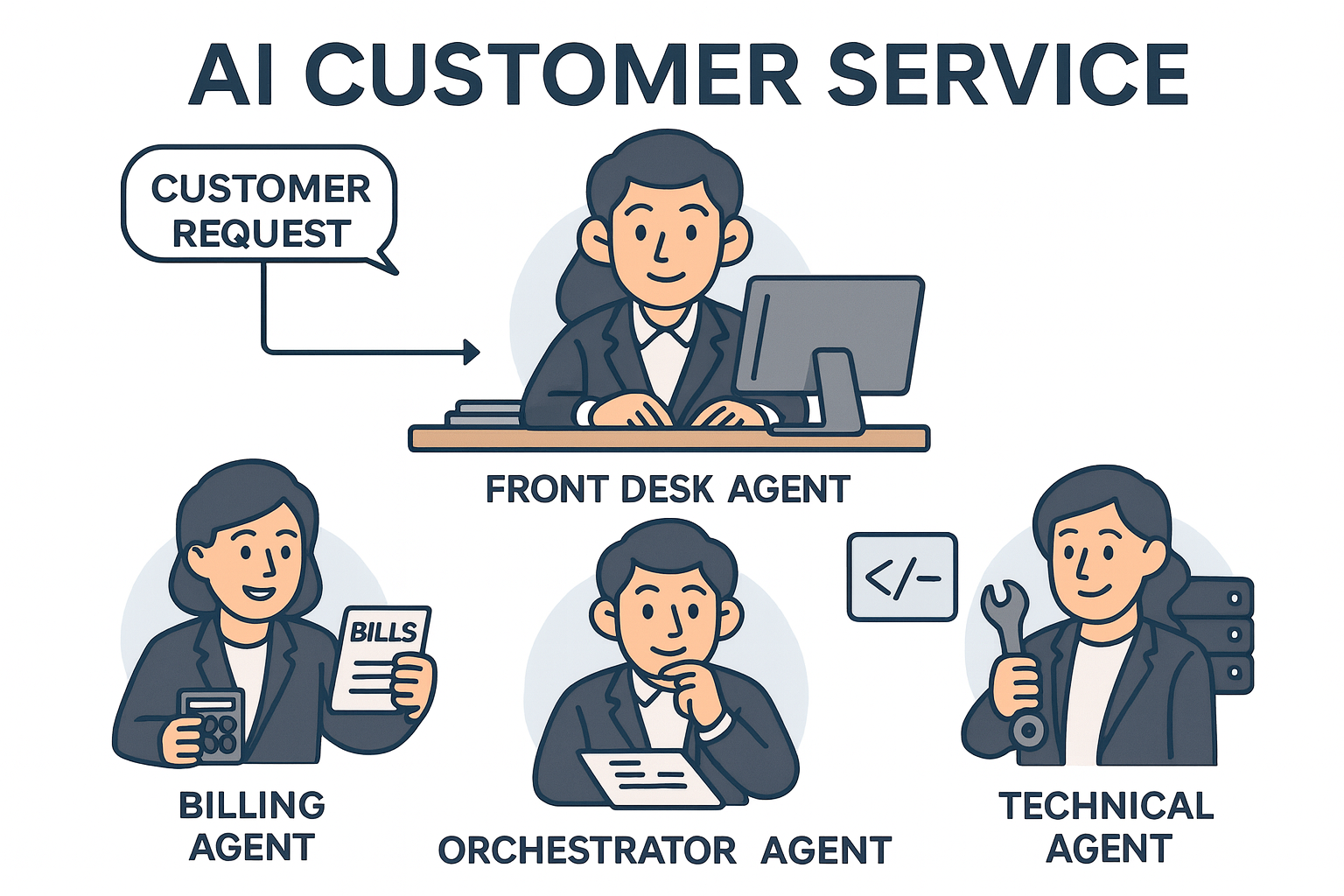

Agents in Customer Service

Now let's walk through a .NET app that uses the A2A (Agent2Agent) protocol and the A2A .NET SDK to orchestrate multiple AI agents for customer support. You’ll see how front desk, billing, technical and orchestrator agents collaborate to acknowledge, route, resolve and synthesize responses for support tickets, all backed by Azure OpenAI via Semantic Kernel (yes, I fit SK everywhere).

Architecture overview

The architecture of this system is cleanly divided into backend and frontend components. The backend, built with ASP.NET Core, defines four main agents: Front Desk, Billing, Technical, and Orchestrator. Each agent is implemented in A2AAgents.cs and is responsible for a specific aspect of the customer service workflow. The Front Desk agent acknowledges incoming tickets and determines routing; the Billing and Technical agents handle domain-specific issues. Lastly, the Orchestrator agent synthesizes the responses from the specialists into a single, customer-friendly message. The LLM integration is handled by LLMService.cs, which uses Semantic Kernel to call Azure OpenAI Service, but the design makes it easy to swap in a different LLM.
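The "easy swapping" point is essentially dependency injection. The TypeScript sketch below is not the app's C# code; it shows each agent as a role plus instructions that depends only on a hypothetical LlmClient interface, so an Azure OpenAI-backed client or a test double can be plugged in without touching the agent. LlmClient, EchoLlm and SupportAgent are illustrative names.

```typescript
// Hypothetical abstraction over the model provider (Azure OpenAI, another provider, or a mock).
interface LlmClient {
  complete(systemPrompt: string, userInput: string): Promise<string>;
}

// Trivial test double: echoes its inputs instead of calling a model.
class EchoLlm implements LlmClient {
  async complete(systemPrompt: string, userInput: string): Promise<string> {
    return `[${systemPrompt}] ${userInput}`;
  }
}

type AgentRole = "frontdesk" | "billing" | "technical" | "orchestrator";

// An agent is a role plus instructions; the model behind it is injected.
class SupportAgent {
  readonly role: AgentRole;
  private readonly instructions: string;
  private readonly llm: LlmClient;

  constructor(role: AgentRole, instructions: string, llm: LlmClient) {
    this.role = role;
    this.instructions = instructions;
    this.llm = llm;
  }

  handle(input: string): Promise<string> {
    return this.llm.complete(this.instructions, input);
  }
}

const billing = new SupportAgent("billing", "You resolve billing issues", new EchoLlm());
billing.handle("Refund request").then(console.log); // [You resolve billing issues] Refund request
```

Swapping providers then means constructing the agents with a different LlmClient; none of the agent code changes.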

Before you judge my frontend skills, know that it was mainly created with GitHub Spark and GitHub Copilot (4.1 Beast mode).

When a customer submits a ticket, the system initiates a three-layer processing pipeline. First, the Front Desk agent acknowledges the request and routes it to the appropriate specialists. Next, the Billing and/or Technical agents process the ticket in parallel, each generating a focused response. Finally, the Orchestrator agent combines these specialist responses into a unified reply, ensuring that the customer receives a clear and comprehensive answer. This entire flow is managed by A2ATicketService.cs, which coordinates agent assignment, status tracking, and response synthesis.
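The three-layer flow maps naturally onto async code: route, fan out in parallel, then merge. The following TypeScript sketch is a language-neutral illustration, not a port of A2ATicketService.cs. It assumes agents are plain async functions and uses simple keyword routing as a stand-in for the LLM-backed Front Desk; the Pipeline type, processTicket, the fallback to the technical agent and the canned replies are all hypothetical.

```typescript
// Hypothetical three-layer support pipeline; agents are plain async functions here.
type Specialist = "billing" | "technical";
type AgentFn = (input: string) => Promise<string>;

interface Pipeline {
  route: (ticket: string) => Promise<Specialist[]>; // Front Desk: acknowledge & route
  specialists: Record<Specialist, AgentFn>; // Billing / Technical
  synthesize: (ticket: string, answers: string[]) => Promise<string>; // Orchestrator
}

async function processTicket(p: Pipeline, ticket: string): Promise<string> {
  // Layer 1: the front desk decides which specialists are needed.
  const targets = await p.route(ticket);
  // Layer 2: the selected specialists work in parallel.
  const answers = await Promise.all(targets.map((s) => p.specialists[s](ticket)));
  // Layer 3: the orchestrator merges the specialist answers into one reply.
  return p.synthesize(ticket, answers);
}

// Mock wiring: keyword routing stands in for the LLM.
const demo: Pipeline = {
  route: async (ticket) => {
    const t = ticket.toLowerCase();
    const targets: Specialist[] = [];
    if (t.includes("charge") || t.includes("invoice")) targets.push("billing");
    if (t.includes("error") || t.includes("login")) targets.push("technical");
    if (targets.length === 0) targets.push("technical"); // hypothetical fallback
    return targets;
  },
  specialists: {
    billing: async () => "Billing: a refund for the duplicate charge has been requested.",
    technical: async () => "Technical: please clear your cache and retry the login.",
  },
  synthesize: async (_ticket, answers) => answers.join(" "),
};

processTicket(demo, "I was charged twice and now get a login error").then(console.log);
```

Promise.all keeps the answers in routing order, so the orchestrator sees a deterministic list regardless of which specialist finishes first.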

```mermaid
flowchart TD
    A[Customer Submits Ticket] --> B[Front Desk Agent<br/>Acknowledge & Route]
    B --> C1[Billing Agent<br/>Domain Response]
    B --> C2[Technical Agent<br/>Domain Response]
    C1 & C2 --> D[Orchestrator Agent<br/>Synthesize Responses]
    D --> E[Final Customer Response]
```

Each agent is exposed as an HTTP endpoint using the A2A .NET SDK's ASP.NET Core middleware. In Program.cs, you’ll find lines like app.MapA2A(frontDeskTaskManager, "/frontdesk"), which bind each agent’s TaskManager to a route. The agents implement the OnMessageReceived handler to process incoming A2A messages, transform them into actionable work (such as generating a response with the LLM), and return results in the A2A message format. This standardisation makes it easy to add new agents or swap out implementations without breaking the orchestration logic.
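The handler pattern described above (unwrap the incoming message, do the work, wrap the result as an A2A message) can be sketched in TypeScript as follows. It is an analogy to the SDK's OnMessageReceived hook, not its actual signature: the message shape is a trimmed, hypothetical subset with text parts only, and makeHandler is an illustrative name.

```typescript
// Trimmed, hypothetical A2A message shape (text parts only).
type TextPart = { kind: "text"; text: string };

interface A2AMessage {
  kind: "message";
  role: "user" | "agent";
  messageId: string;
  parts: TextPart[];
}

type Work = (text: string) => Promise<string>;

// Analogy to an OnMessageReceived handler: unwrap text, do the work, wrap the reply.
function makeHandler(work: Work) {
  let counter = 0;
  return async (incoming: A2AMessage): Promise<A2AMessage> => {
    const text = incoming.parts.map((p) => p.text).join("\n");
    const reply = await work(text);
    counter += 1;
    return {
      kind: "message",
      role: "agent",
      messageId: `reply-${counter}`,
      parts: [{ kind: "text", text: reply }],
    };
  };
}

const frontDesk = makeHandler(async (text) => `Thanks, we received: "${text}"`);
frontDesk({ kind: "message", role: "user", messageId: "m1", parts: [{ kind: "text", text: "My app crashes" }] })
  .then((msg) => console.log(msg.parts[0]?.text)); // Thanks, we received: "My app crashes"
```

The work function is the only agent-specific piece; the message plumbing around it stays identical for every agent.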

The frontend, built with React, communicates with the backend via a REST API defined in api.ts. It allows users to submit tickets, view agent statuses and track the progress of their requests. The backend supports both mock and real LLM modes, making it easy to develop and test locally without requiring Azure OpenAI credentials. Switching between modes is as simple as toggling a configuration value or calling the /toggle-implementation endpoint.
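A mock/real switch like this can be modelled as a wrapper that delegates to one of two implementations. This TypeScript sketch assumes a hypothetical LlmClient interface and SwitchableLlm class; the real app toggles via configuration or the /toggle-implementation endpoint, and the realLlm placeholder here deliberately throws because no Azure OpenAI client is wired up.

```typescript
// Hypothetical provider abstraction; not the app's LLMService.
interface LlmClient {
  complete(prompt: string): Promise<string>;
}

type Mode = "mock" | "real";

// Delegates to either implementation and can be flipped at runtime.
class SwitchableLlm implements LlmClient {
  private mode: Mode;
  private readonly mock: LlmClient;
  private readonly real: LlmClient;

  constructor(initial: Mode, mock: LlmClient, real: LlmClient) {
    this.mode = initial;
    this.mock = mock;
    this.real = real;
  }

  get current(): Mode {
    return this.mode;
  }

  // Flip between modes and report the new one.
  toggle(): Mode {
    this.mode = this.mode === "mock" ? "real" : "mock";
    return this.mode;
  }

  complete(prompt: string): Promise<string> {
    return (this.mode === "mock" ? this.mock : this.real).complete(prompt);
  }
}

const mockLlm: LlmClient = { complete: async (p) => `mock reply to: ${p}` };
// Placeholder for a real Azure OpenAI-backed client; credentials are out of scope here.
const realLlm: LlmClient = { complete: async () => { throw new Error("real LLM not configured"); } };

const llm = new SwitchableLlm("mock", mockLlm, realLlm);
llm.complete("hello").then(console.log); // mock reply to: hello
```

Since agents only see LlmClient, flipping the mode affects every agent at once without restarting anything.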

To run the sample, start the backend with dotnet run from the backend directory (which also restores packages), and launch the frontend with npm run dev from the frontend directory. By default, the system runs in mock mode, but you can enable real LLM integration by providing your Azure OpenAI endpoint and key as environment variables. The README has all the details!

Room for improvement

This app is mainly for development and prototyping purposes, so there is plenty of room to bring it in line with best practices and the protocol specification. If you want to extend it and take it to production, this is what I'd recommend:

- Agent Card completeness: I haven't filled in every field, so add the missing details such as skill ids, tags and so on.

- Security: Put a proper authentication mechanism in place.

- Discovery: Expose each Agent Card at the well-known location defined in the protocol specification so other agents can find it.

- Task lifecycle/streaming: This becomes important for longer-running tasks. A2A supports SSE (Server-Sent Events), so you can stream progress updates instead of making the client wait.
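On the client, streaming mostly comes down to reading an SSE stream whose data lines each carry a JSON-RPC response. The sketch below is a minimal SSE data parser plus a decoder for status updates. It assumes the input holds complete events (a trailing event without a blank line is dropped, as the SSE format does at end of stream), ignores the event, id and retry fields, and uses a trimmed, hypothetical payload modelled on A2A's status-update events; check the spec for the exact fields.

```typescript
// Minimal Server-Sent Events parser: returns the joined `data:` payload of each complete event.
// Simplifications: splits on \n or \r\n only and ignores the event, id and retry fields.
function parseSseData(raw: string): string[] {
  const events: string[] = [];
  let dataLines: string[] = [];
  for (const line of raw.split(/\r?\n/)) {
    if (line === "") {
      // A blank line terminates the current event.
      if (dataLines.length > 0) events.push(dataLines.join("\n"));
      dataLines = [];
    } else if (line.startsWith("data:")) {
      // A single space after the colon, if present, is not part of the value.
      const value = line.slice("data:".length);
      dataLines.push(value.startsWith(" ") ? value.slice(1) : value);
    }
    // Comment lines (starting with ":") and other fields are ignored.
  }
  // Lines left in dataLines form an incomplete event and are dropped.
  return events;
}

// Hypothetical, trimmed shape of a streamed A2A status update.
interface StatusUpdate {
  jsonrpc: "2.0";
  id: number | string;
  result: { kind: "status-update"; status: { state: string }; final: boolean };
}

const stream =
  'data: {"jsonrpc":"2.0","id":1,"result":{"kind":"status-update","status":{"state":"working"},"final":false}}\n\n' +
  'data: {"jsonrpc":"2.0","id":1,"result":{"kind":"status-update","status":{"state":"completed"},"final":true}}\n\n';

const states = parseSseData(stream).map((d) => (JSON.parse(d) as StatusUpdate).result.status.state);
console.log(states.join(" -> ")); // working -> completed
```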

A2A vs. MCP - Mates!

One common question: “Isn’t MCP also a protocol? Do I have to choose?”

Nope.

- MCP = How an agent talks to its own tools and APIs.

- A2A = How agents talk to each other.

In sophisticated systems, you’ll often see both: an agent delegates a task over A2A to another agent, which uses MCP to call its own tools and return the result.
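The layering can be sketched with two hypothetical interfaces: an A2AClient for calling other agents and an McpClient for calling tools. Neither mirrors a real SDK; both are backed by in-memory fakes here, and the get_invoice tool, the URL and the invoice data are made up. The point is that the caller only knows the billing agent's address, while the billing agent alone knows which tools it uses.

```typescript
// Hypothetical interfaces: one for agent-to-agent calls (A2A), one for tool calls (MCP).
interface A2AClient {
  send(agentUrl: string, text: string): Promise<string>;
}
interface McpClient {
  callTool(name: string, args: Record<string, unknown>): Promise<unknown>;
}

type Handler = (text: string) => Promise<string>;

// The billing agent's internals: it reaches its data through MCP tools.
function billingAgent(tools: McpClient): Handler {
  return async (text) => {
    const match = /INV-\d+/.exec(text);
    if (!match) return "Please include your invoice number.";
    const invoice = (await tools.callTool("get_invoice", { id: match[0] })) as { total: number };
    return `Invoice ${match[0]} totals ${invoice.total.toFixed(2)}.`;
  };
}

// In-memory fakes standing in for a real MCP server and a real A2A transport.
const fakeTools: McpClient = {
  callTool: async (name, args) => (name === "get_invoice" ? { id: args.id, total: 42 } : null),
};
const agents = new Map<string, Handler>([["https://billing.example.com/a2a", billingAgent(fakeTools)]]);
const a2a: A2AClient = {
  send: async (url, text) => {
    const agent = agents.get(url);
    if (!agent) throw new Error(`No agent at ${url}`);
    return agent(text);
  },
};

// The caller only knows the billing agent's URL, not which tools it uses.
a2a.send("https://billing.example.com/a2a", "Question about INV-1007").then(console.log);
// prints: Invoice INV-1007 totals 42.00.
```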

The beauty of A2A is that it takes something messy and turns it into something predictable: a clean handshake between agents, no matter who built them or what stack they’re running on. For us as developers, it means we can stop obsessing over glue code and start focusing on the real value our agents deliver.

In the same way HTTP made the web possible, A2A is laying the groundwork for an interconnected agent world. Whether you’re building a billing agent in .NET, a travel planner in Python or a support desk agent in Node.js, the protocol lets them all speak the same language and get work done together.

In the end, AI isn’t about building a single, perfect agent. It’s about creating an ecosystem where agents collaborate, not compete. And with A2A, we finally have the blueprint to make that happen.

Until next time.