As announced today at Build 2019 (and I am happy to have contributed my bit to this project), Microsoft wants you to build customized digital assistants that are tailored to your brand, personalized to your users, and available across a broad range of canvases and devices.

Continuing the open-source approach Microsoft took with the Bot Framework SDK, the Virtual Assistant solution is open source as well. It gives you a set of core foundational capabilities and full control over the end-user experience.

Among these capabilities, Skills are reusable conversational building blocks. They cover common features that each developer would otherwise have to build themselves, and they let you add extensive functionality to a bot.

As this post does not cover the concepts in detail, I just want to highlight a few major components of Skills.

One example scenario: a voice-enabled Virtual Assistant integrated into a hotel-room device, providing a broad range of hospitality-focused scenarios (e.g. extending your stay, requesting a late checkout, room service), including concierge and the ability to find local restaurants and attractions. Optionally linking your productivity accounts opens up more personalized experiences such as suggested alarm calls, weather warnings, and learning of patterns across stays. It is an evolution of the TV personalization experienced in hotel rooms today.

Overview

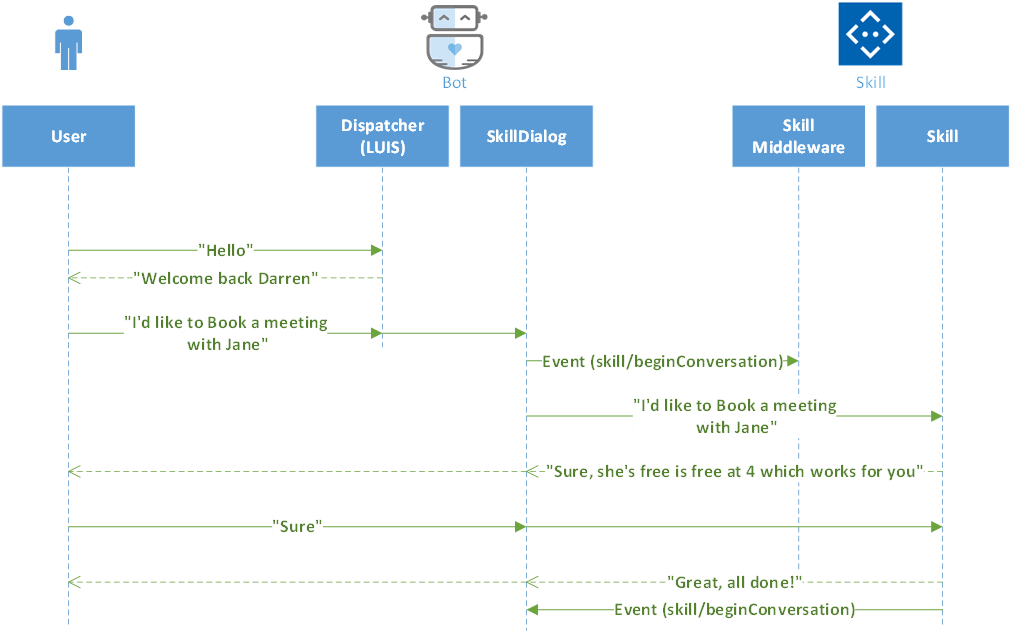

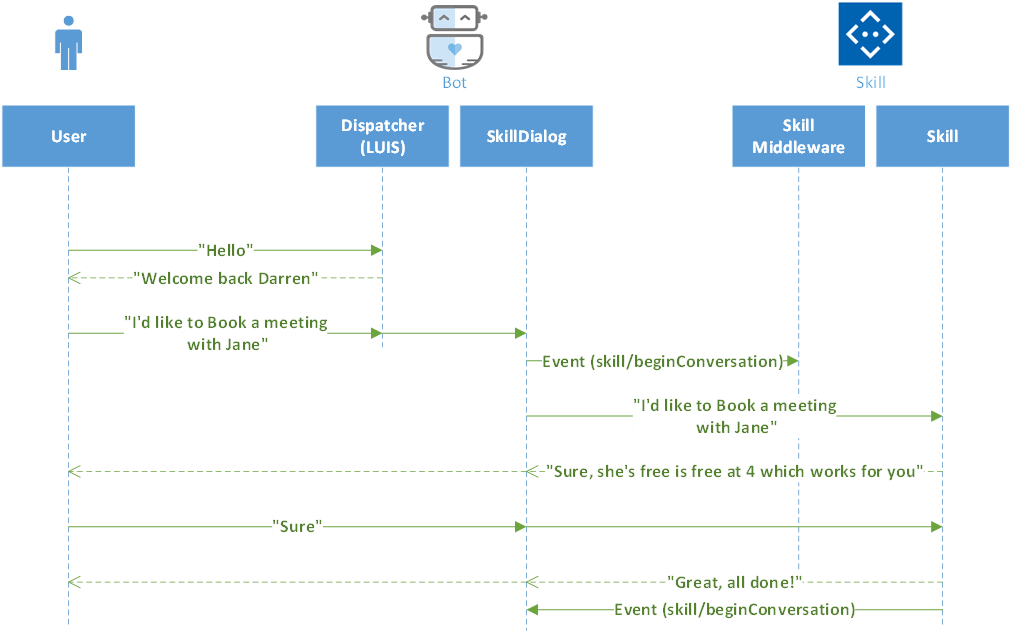

A Skill is like a normal SDK v4 bot, with the added value that it can be plugged into a broader, enterprise-style solution. The only thing that makes it different is that it is invoked from another bot. Microsoft has already shipped a few ready-to-deploy Skills for common scenarios such as Calendar, Email, and Point of Interest. However, if you want to build your own, it's easier than building a paper rocket!

Currently, you can only develop Skills in C#, but you can always invoke C#-based Skills from a TypeScript bot.

The key design goal for Skills was to stay consistent with the Activity protocol of a normal SDK v4 bot while leveraging the power of the Dispatcher tool. Below is an overview of the invocation flow, in which the bot starts a SkillDialog that abstracts the skill invocation mechanics.

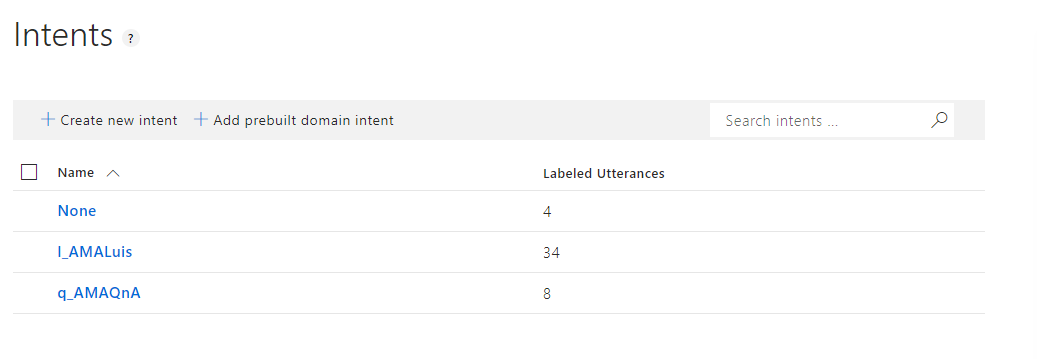

- Dispatcher - Set up with the help of the Skill CLI, its main role is to understand the given utterance and work out how best to process it.

- Skill Dialog - The heart of a skill. Once the Dispatcher identifies a skill that is mapped to a user's utterance, all communication between the Virtual Assistant and the Skill goes through this dialog.

- Skill Manifest - Provides all of the metadata a calling bot needs to know when to invoke a skill and what actions the skill provides. The manifest is used by the Skill command-line tool to configure a bot to make use of a Skill.

- Skill Middleware - Used by each skill. The skill begin event is also sent through this middleware.

Your Bot as a Skill

The team at Microsoft has done a tremendous job of keeping bot behavior consistent, so you can turn your bot into a Skill and add it to other bots with just some configuration. Before I get into the details, I would like to tell you that if you want your bot to act as a Skill, there's a Skill Template that creates a skill without any manual work. However, if you really want to take your existing bot and expose it as a Skill manually, follow the steps below.

1. Pre-requisites / NuGet Packages

You should add the Microsoft.Bot.Builder.Solutions and Microsoft.Bot.Builder.Skills packages to your solution.

2. CustomSkillAdapter.cs (Adapter)

You should now create a CustomSkillAdapter that inherits from SkillAdapter, and make sure the SkillMiddleware is added to it:

    public class CustomSkillAdapter : SkillAdapter
    {
        public CustomSkillAdapter(
            BotSettings settings,
            ICredentialProvider credentialProvider,
            BotStateSet botStateSet,
            ResponseManager responseManager,
            IBotTelemetryClient telemetryClient,
            UserState userState)
            : base(credentialProvider)
        {
            // ...

            // Register the SkillMiddleware on this adapter.
            Use(new SkillMiddleware(userState));
        }
    }

3. Startup.cs

In your Startup.cs file, add these two lines:

    services.AddTransient<IBotFrameworkHttpAdapter, DefaultAdapter>();
    services.AddTransient<SkillAdapter, CustomSkillAdapter>();

4. Controller.cs (Add Skill Controller)

Now update your bot controller as shown below:

    [ApiController]
    public class BotController : SkillController
    {
        public BotController(IServiceProvider serviceProvider, BotSettingsBase botSettings)
            : base(serviceProvider, botSettings)
        { }
    }

5. manifestTemplate.json (Manifest Template)

As discussed earlier, this manifest provides all of the metadata a calling bot needs to know when to invoke a skill and what actions it provides. Create a manifestTemplate.json file in the root directory of your bot and make sure all the root-level details (name, description, icon, actions, etc.) are filled in:

    {
      "id": "",
      "name": "",
      "description": "",
      "iconUrl": "",
      "authenticationConnections": [ ],
      "actions": [
        {
          "id": "",
          "definition": {
            "description": "",
            "slots": [ ],
            "triggers": {
              "utteranceSources": [
                {
                  "locale": "en",
                  "source": [
                    "luisModel#intent"
                  ]
                }
              ]
            }
          }
        }
      ]
    }
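To make the template a little more concrete, here is a minimal sketch of what a filled-in manifest might look like and how its triggers could be read. Everything in it is an assumption for illustration: the classes are hypothetical stand-ins that mirror a few of the template's fields (the real SkillManifest type lives in Microsoft.Bot.Builder.Skills), the skill and intent names are made up, and I am reading the "luisModel#intent" placeholder as a model name followed by an intent name. It uses System.Text.Json, so it needs .NET Core 3.0 or later.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical stand-ins mirroring part of the manifest template.
// These are NOT the types from Microsoft.Bot.Builder.Skills.
public class UtteranceSourceSketch
{
    public string Locale { get; set; }
    public List<string> Source { get; set; } = new List<string>();
}

public class TriggersSketch
{
    public List<UtteranceSourceSketch> UtteranceSources { get; set; } = new List<UtteranceSourceSketch>();
}

public class DefinitionSketch
{
    public string Description { get; set; }
    public TriggersSketch Triggers { get; set; } = new TriggersSketch();
}

public class ActionSketch
{
    public string Id { get; set; }
    public DefinitionSketch Definition { get; set; } = new DefinitionSketch();
}

public class ManifestSketch
{
    public string Id { get; set; }
    public string Name { get; set; }
    public List<ActionSketch> Actions { get; set; } = new List<ActionSketch>();
}

public static class ManifestDemo
{
    // Made-up values for illustration only.
    public const string SampleJson = @"{
      ""id"": ""weatherSkill"",
      ""name"": ""Weather Skill"",
      ""actions"": [
        {
          ""id"": ""weatherSkill_getForecast"",
          ""definition"": {
            ""description"": ""Get the forecast for a location"",
            ""triggers"": {
              ""utteranceSources"": [
                { ""locale"": ""en"", ""source"": [ ""weather#getForecast"" ] }
              ]
            }
          }
        }
      ]
    }";

    // Deserialize the JSON, matching camelCase keys to PascalCase properties.
    public static ManifestSketch Load(string json) =>
        JsonSerializer.Deserialize<ManifestSketch>(
            json, new JsonSerializerOptions { PropertyNameCaseInsensitive = true });

    public static void Main()
    {
        var manifest = Load(SampleJson);
        foreach (var action in manifest.Actions)
        foreach (var utteranceSource in action.Definition.Triggers.UtteranceSources)
        foreach (var source in utteranceSource.Source)
        {
            // Assumption: "model#intent" splits into a model name and an intent name.
            var parts = source.Split('#');
            Console.WriteLine($"{action.Id}: model={parts[0]}, intent={parts[1]} ({utteranceSource.Locale})");
        }
    }
}
```

Running this prints one line per trigger source, which is a quick way to sanity-check that every action in your manifest points at the intent you expect.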

Add Skills in Your Bot

The easiest way to get started with Skills is to create a bot with the Virtual Assistant Template, but I totally understand that there are cases where you just want to use Skills in your lightweight bot instead of creating a heavy project. Hence, this short tutorial shows how you can manually add the Skill capability to your bots.

1. Pre-requisites / NuGet Packages

You should add the Microsoft.Bot.Builder.Solutions and Microsoft.Bot.Builder.Skills packages to your solution.

2. Skills.json (Skill Configuration)

The Microsoft.Bot.Builder.Skills package exposes the SkillManifest type, which describes a Skill. Your bot is responsible for maintaining the collection of all its registered Skills. I would highly encourage you to have a look at the code of the Virtual Assistant Template: it keeps the collection in a Skills.json file and, as part of its configuration, deserializes the registered Skills into a property like this one:

    public List<SkillManifest> Skills { get; set; }

3. Startup.cs (Skill Dialog Registration)

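You can now register a Skill Dialog for each of your registered Skills in your Startup.cs file:

    // Register skill dialogs
    // Note: `settings` is assumed to be the bot settings instance already loaded in ConfigureServices.
    services.AddTransient(sp =>
    {
        var userState = sp.GetService<UserState>();

        // Assumes an IBotTelemetryClient has been registered with the service collection.
        var telemetryClient = sp.GetService<IBotTelemetryClient>();
        var skillDialogs = new List<SkillDialog>();

        foreach (var skill in settings.Skills)
        {
            // Null when the skill does not require authentication.
            var authDialog = BuildAuthDialog(skill, settings);
            var credentials = new MicrosoftAppCredentialsEx(settings.MicrosoftAppId, settings.MicrosoftAppPassword, skill.MSAappId);
            skillDialogs.Add(new SkillDialog(skill, credentials, telemetryClient, userState, authDialog));
        }

        return skillDialogs;
    });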

If any of your Skills requires authentication, you should create an associated MultiProviderAuthDialog as implemented here:

    // This method creates a MultiProviderAuthDialog based on a skill manifest.
    // Requires: using System.Linq;
    private MultiProviderAuthDialog BuildAuthDialog(SkillManifest skill, BotSettings settings)
    {
        if (skill.AuthenticationConnections?.Count() > 0)
        {
            if (settings.OAuthConnections.Any() && settings.OAuthConnections.Any(o => skill.AuthenticationConnections.Any(s => s.ServiceProviderId == o.Provider)))
            {
                // Keep only the configured connections whose provider the skill supports.
                var oauthConnections = settings.OAuthConnections.Where(o => skill.AuthenticationConnections.Any(s => s.ServiceProviderId == o.Provider)).ToList();
                return new MultiProviderAuthDialog(oauthConnections);
            }
            else
            {
                throw new Exception($"You must configure at least one supported OAuth connection to use this skill: {skill.Name}.");
            }
        }

        // The skill does not require authentication.
        return null;
    }
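The nested Any calls in BuildAuthDialog can be a little hard to read, so here is the matching rule on its own as a small sketch. The two classes are hypothetical stand-ins for the manifest's authentication connections and the bot's configured OAuth connections, and the provider and connection names are made up.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for an entry in the manifest's authenticationConnections.
public class RequiredConnectionSketch
{
    public string ServiceProviderId { get; set; }
}

// Hypothetical stand-in for an OAuth connection configured on the bot.
public class ConfiguredConnectionSketch
{
    public string Name { get; set; }
    public string Provider { get; set; }
}

public static class AuthMatchSketch
{
    // Keep only the configured connections whose provider the skill asks for.
    public static List<ConfiguredConnectionSketch> Match(
        IEnumerable<RequiredConnectionSketch> required,
        IEnumerable<ConfiguredConnectionSketch> configured) =>
        configured
            .Where(c => required.Any(r => r.ServiceProviderId == c.Provider))
            .ToList();

    public static void Main()
    {
        // Made-up values for illustration only.
        var required = new List<RequiredConnectionSketch>
        {
            new RequiredConnectionSketch { ServiceProviderId = "Azure Active Directory v2" },
        };
        var configured = new List<ConfiguredConnectionSketch>
        {
            new ConfiguredConnectionSketch { Name = "Outlook", Provider = "Azure Active Directory v2" },
            new ConfiguredConnectionSketch { Name = "GitHub", Provider = "GitHub" },
        };

        var usable = Match(required, configured);
        Console.WriteLine(string.Join(", ", usable.Select(c => c.Name))); // prints "Outlook"
    }
}
```

If the resulting list is empty for a skill that does declare authentication connections, that is the case where BuildAuthDialog throws.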

4. MainDialog.cs (Route Utterances to Skills)

First of all, make sure the registered Skill Dialogs are added to your main dialog. You can add them with the following code:

    foreach (var skillDialog in skillDialogs)
    {
        AddDialog(skillDialog);
    }

Once your Dispatcher has run and returned an intent, check whether it matches any Action within a Skill, as in the snippet below. If a skill is identified, hand the conversation off to that skill's dialog. Otherwise, your normal intent logic follows.

    // Identify whether the dispatch intent matches any Action within a Skill; if so, hand off to the appropriate SkillDialog
    var identifiedSkill = SkillRouter.IsSkill(_settings.Skills, intent.ToString());

    if (identifiedSkill != null)
    {
        // We have identified a skill, so initialize the skill connection with the target skill.
        // The dispatch intent is the Action ID of the Skill, enabling us to resolve the specific action and identify slots.
        await dc.BeginDialogAsync(identifiedSkill.Id, intent);

        // Pass the activity we have
        var result = await dc.ContinueDialogAsync();

        if (result.Status == DialogTurnStatus.Complete)
        {
            await CompleteAsync(dc);
        }
    }
    else
    {
        // Your normal intent routing logic
    }
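If you are wondering what the skill lookup boils down to, here is a rough, self-contained sketch. It assumes the match is an exact comparison between the dispatch intent and each action ID, which is what the comment in the snippet above suggests; I have not reproduced the library's implementation, so treat FindSkill and the two classes as hypothetical stand-ins rather than the real SkillRouter and SkillManifest. The skill and intent names are made up.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-ins; the real types live in Microsoft.Bot.Builder.Skills.
public class SkillActionSketch
{
    public string Id { get; set; }
}

public class SkillSketch
{
    public string Id { get; set; }
    public List<SkillActionSketch> Actions { get; set; } = new List<SkillActionSketch>();
}

public static class SkillRouterSketch
{
    // Returns the first skill declaring an action whose ID equals the
    // dispatch intent, or null when no skill claims the intent.
    public static SkillSketch FindSkill(IEnumerable<SkillSketch> skills, string dispatchIntent) =>
        skills.FirstOrDefault(s => s.Actions.Any(a => a.Id == dispatchIntent));
}

public static class RouterDemo
{
    public static void Main()
    {
        // Made-up skills and intents for illustration only.
        var skills = new List<SkillSketch>
        {
            new SkillSketch
            {
                Id = "calendarSkill",
                Actions = { new SkillActionSketch { Id = "calendarSkill_createEvent" } },
            },
            new SkillSketch
            {
                Id = "emailSkill",
                Actions = { new SkillActionSketch { Id = "emailSkill_sendEmail" } },
            },
        };

        foreach (var intent in new[] { "emailSkill_sendEmail", "general_greeting" })
        {
            var skill = SkillRouterSketch.FindSkill(skills, intent);
            Console.WriteLine(skill != null
                ? $"{intent} -> hand off to {skill.Id}"
                : $"{intent} -> normal intent routing");
        }
    }
}
```

The practical takeaway is that the action IDs in your manifests and the intent names in your dispatch model have to line up, otherwise the utterance silently falls through to your normal routing.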

You can always reach out to me if you have any questions, but I would also recommend having a look at Stack Overflow and posting there if necessary.

Happy coding!